Detecting attention to videos

Can we determine what a person is paying attention to on a screen? We are testing an experimental paradigm to determine how well we can decode which of two videos a person is watching. The long-term applications include giving people feedback when they get distracted (ideally to help train people to have more control over their own attention) and translation to “real world” attention detection outside of the laboratory.

Guiding augmented reality systems

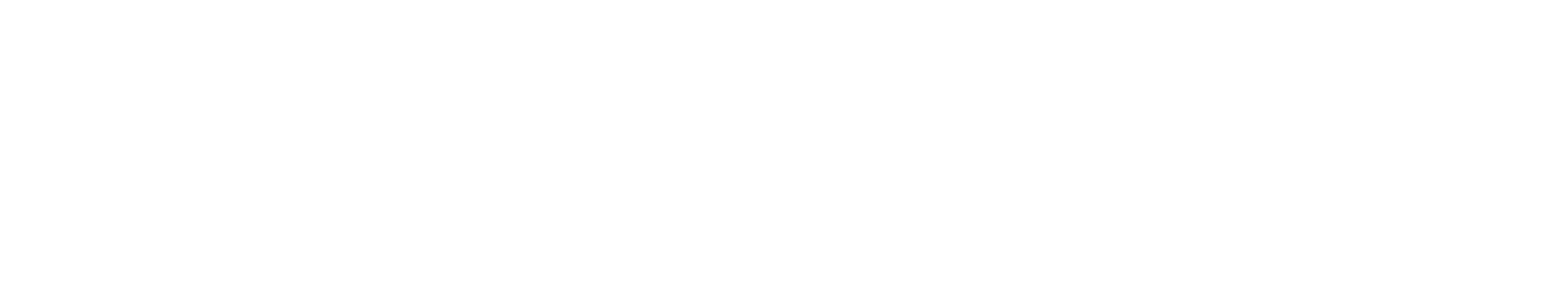

Augmented, virtual, and mixed reality systems have great potential to provide us with useful information in real time, but also risk problems with distraction and safety. We are interested in improving user interfaces for AR/MR by understanding how people pay attention in semi-natural environments. This work involves classifying people’s cognitive state, such as their wakefulness, cognitive load, or attentional focus. We look to combine tools like eye tracking, environment monitoring, EEG, and other bio-sensors. Output from these systems can be used to design better user interactions and to provide live feedback to customize user experiences.

Weeping angel: human-machine interface

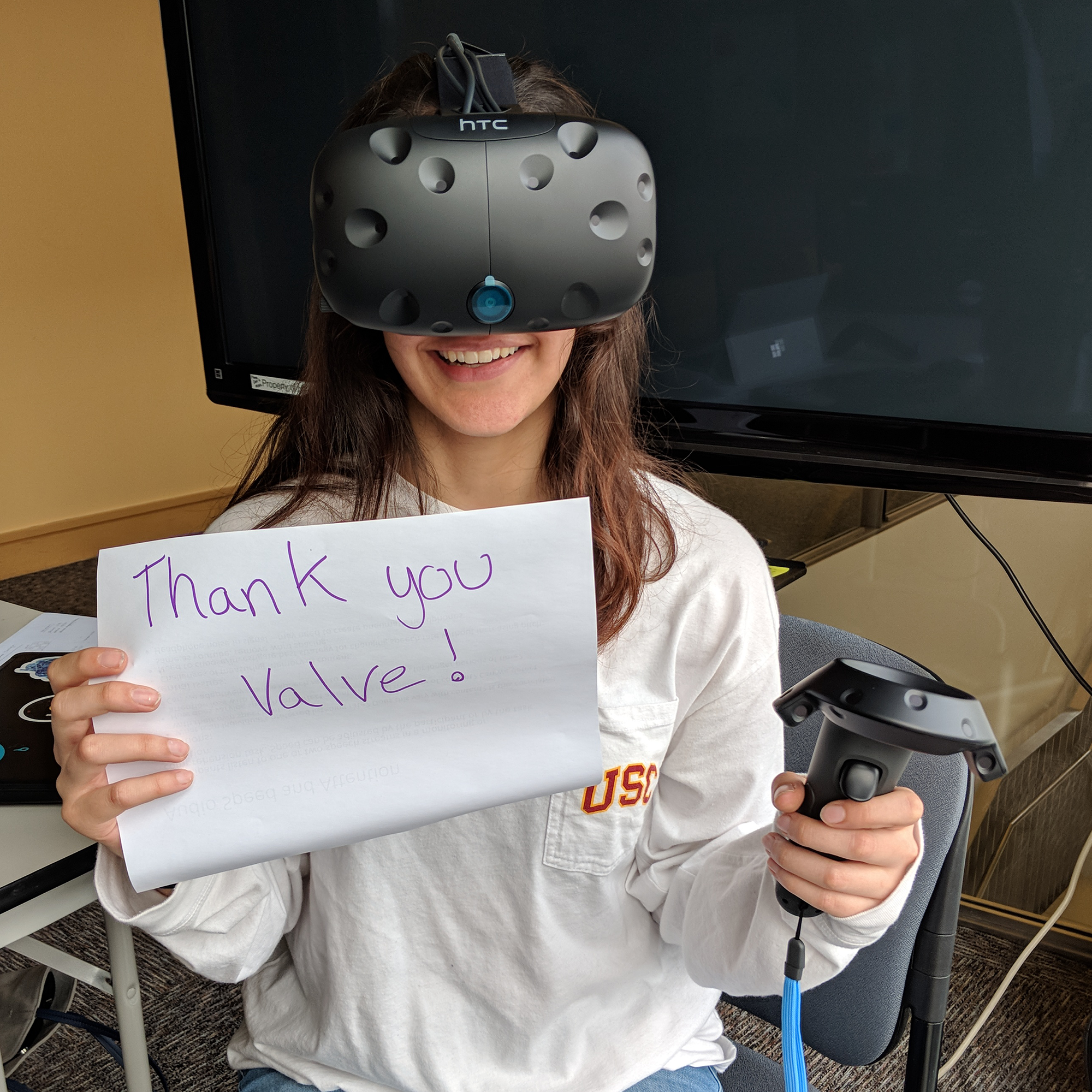

Control a robot just by looking at it. This project is inspired by the creepy Weeping Angels from Doctor Who and the childhood game Red Light, Green Light. Using an inexpensive EEG/EMG headset, our system aims to detect when you blink or close your eyes and then signals the weeping angel robot to drive toward you. This project combines signal processing, machine learning, and robotics.
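To give a feel for the signal-processing side, here is a minimal sketch of blink detection by simple amplitude thresholding. It is not the lab's actual pipeline: the function name `detect_blinks`, the threshold, and the `min_gap_s` parameter are all hypothetical, and a real system would likely add filtering and a trained classifier. It only assumes that a blink shows up as a large deflection in a single-channel recording.

```python
import numpy as np


def detect_blinks(signal, fs, threshold, min_gap_s=0.2):
    """Return sample indices where a blink-like deflection begins.

    signal     -- 1-D array from one headset channel (hypothetical input)
    fs         -- sampling rate in Hz
    threshold  -- amplitude (same units as signal) that counts as a blink
    min_gap_s  -- onsets closer together than this are treated as one blink
    """
    signal = np.asarray(signal, dtype=float)
    # Remove the baseline offset so the threshold is relative to rest level.
    centered = signal - np.median(signal)
    above = np.abs(centered) > threshold

    # A rising edge is a sample above threshold whose predecessor was not.
    onsets = np.flatnonzero(above[1:] & ~above[:-1]) + 1
    if above.size and above[0]:
        onsets = np.insert(onsets, 0, 0)

    # Drop onsets that follow the previously kept onset too closely.
    min_gap = int(min_gap_s * fs)
    kept = []
    for idx in onsets:
        if not kept or idx - kept[-1] >= min_gap:
            kept.append(int(idx))
    return kept


# Synthetic example: a flat signal with two artificial "blinks".
fs = 250
demo = np.zeros(1000)
demo[200:230] = 100.0
demo[600:640] = 120.0
blink_onsets = detect_blinks(demo, fs, threshold=50.0)
```

Each detected onset could then be turned into a "drive forward" command for the robot; how that command is sent depends on the robot's interface, which is not specified here.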

Iris recognition

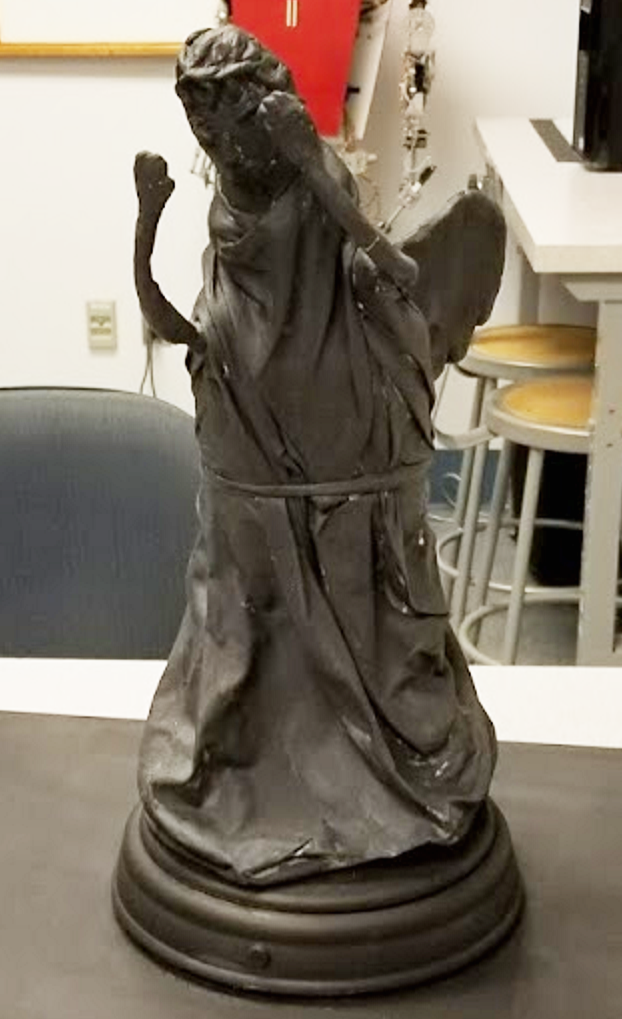

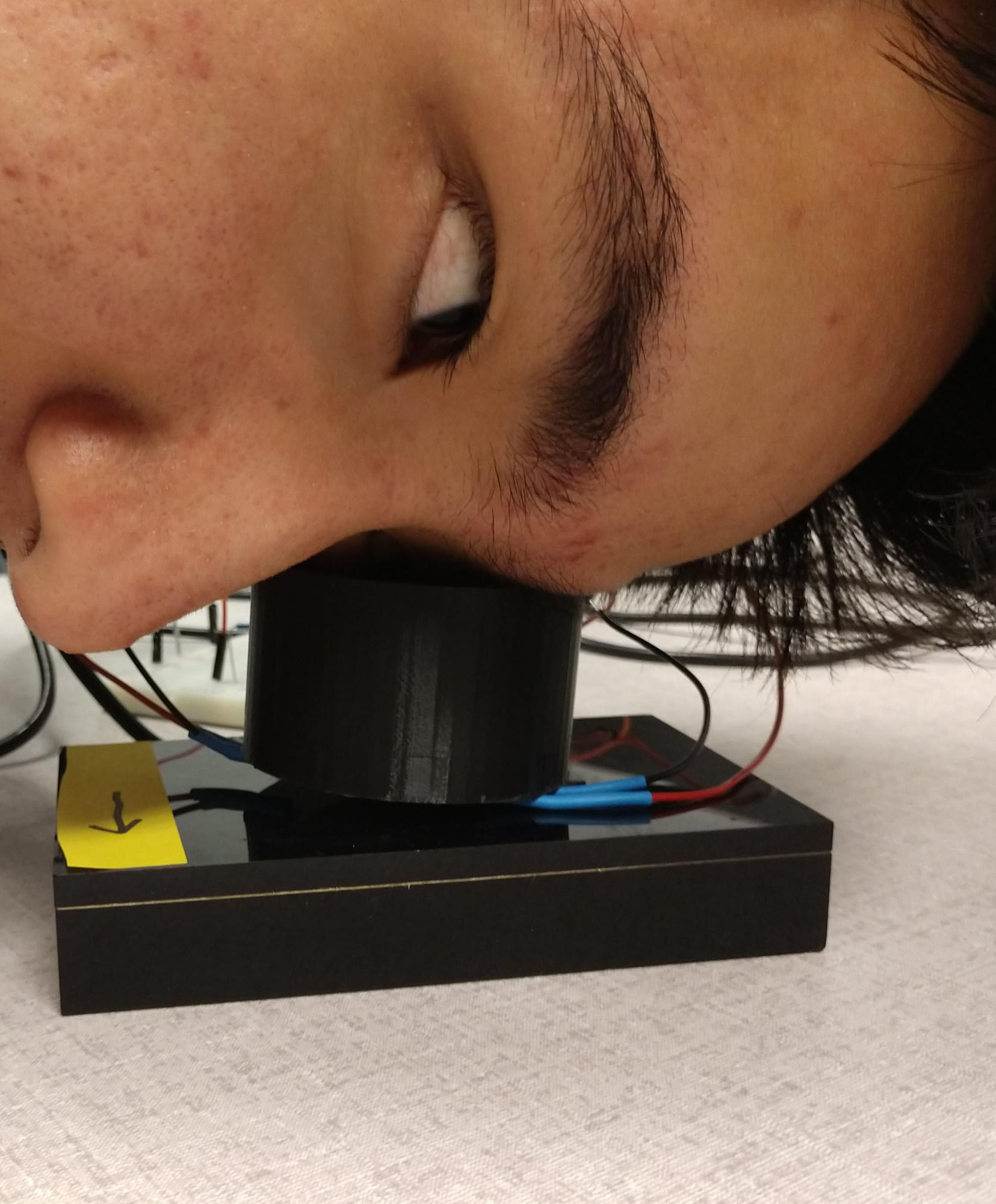

We are building an iris recognition system to greet people in the lab! Our system will take an image of your iris using a camera and infrared LEDs. Using our Python code, the image is transformed and key features are extracted. These features are compared to our iris database to determine if you are a member of the lab. Lab members will receive a special greeting… Dave may run into issues.
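As an illustration of the comparison step, the sketch below matches a binary feature vector against a small database using normalized Hamming distance, a common choice for iris codes. Whether our system uses this particular measure is an assumption, and the names `hamming_distance` and `identify`, the database layout, and the `max_distance` cutoff are hypothetical values chosen for the example.

```python
import numpy as np


def hamming_distance(code_a, code_b):
    """Fraction of bits that differ between two equal-length binary codes."""
    a = np.asarray(code_a, dtype=bool)
    b = np.asarray(code_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("iris codes must have the same shape")
    return float(np.mean(a != b))


def identify(probe, database, max_distance=0.32):
    """Return (name, distance) for the closest enrolled code.

    database     -- dict mapping a name to that person's binary code
    max_distance -- illustrative cutoff; would need tuning on real data
    Returns (None, distance) when no enrolled code is close enough.
    """
    best_name, best_dist = None, 1.0
    for name, code in database.items():
        dist = hamming_distance(probe, code)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_name is not None and best_dist <= max_distance:
        return best_name, best_dist
    return None, best_dist


# Toy 8-bit codes; real iris codes are far longer.
lab_db = {
    "alice": np.zeros(8, dtype=bool),
    "bob": np.ones(8, dtype=bool),
}
probe = np.array([1, 0, 0, 0, 0, 0, 0, 0], dtype=bool)
match, distance = identify(probe, lab_db)
```

A probe that lands far from every enrolled code returns `None`, which is the case where the visitor would not get the lab-member greeting.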